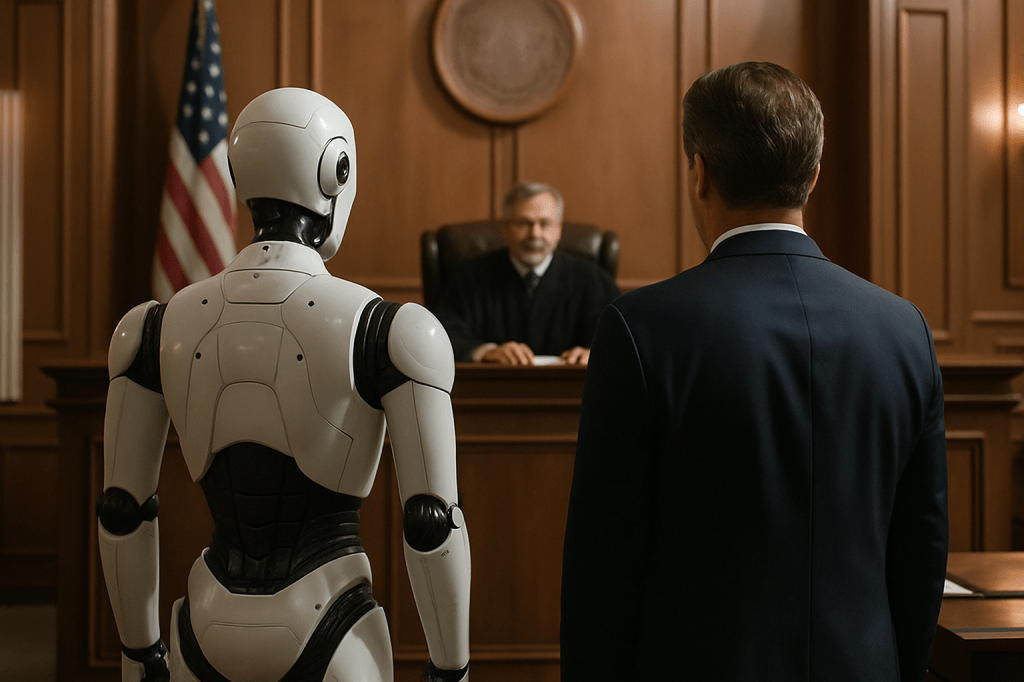

The calendar page may still show August, but October 1st, 2025, just became a critical date for anyone deploying AI in human resources. California, through its Civil Rights Council, has formalized a new regulatory framework that fundamentally redefines accountability for algorithmic employment decisions. As reported in the National Law Review, these rules aren’t just another set of guidelines; they represent a significant regulatory assertion over the AI tools increasingly embedded in hiring, evaluation, and management processes.

A New Definition of Accountability

At the core of these regulations is a subtle yet profound legal expansion of who counts as an employer’s “agent.” Historically, liability for employment discrimination rested squarely with the employer. Now that scope broadens to encompass any entity acting on behalf of the employer, directly or indirectly, in functions traditionally exercised by the employer. This isn’t merely legal semantics; it’s a direct challenge to the traditional ‘vendor-client’ firewall.

- If a third-party AI service screens resumes, evaluates candidates, or even directs job advertisements, that provider is now, in essence, treated as the employer’s agent for anti-discrimination purposes.

- This shifts the onus, forcing employers to demand unprecedented transparency and compliance from their AI toolchain suppliers. It means less ‘plug-and-play’ and more ‘audit-and-verify’ when it comes to AI procurement.

Algorithmic Decisions Under Scrutiny

The rules specifically apply existing anti-discrimination laws under the Fair Employment and Housing Act (FEHA) to “automated decision systems.” This covers a wide array of AI tools currently in use, from initial candidate sourcing to performance evaluations and even internal mobility recommendations. The era of AI operating as a black box in HR is formally ending in California.

- Employers must now ensure their AI applications do not have a disproportionate adverse impact on protected groups. This requires rigorous, ongoing auditing of algorithms for bias, not just at deployment but throughout their lifecycle.

- Transparency is no longer a best practice; it’s a regulatory expectation. Employers will likely need to articulate how AI tools influence decisions and be prepared to justify their use.
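To make the auditing point above concrete, here is a minimal sketch of one common screening check: comparing each group's selection rate against the highest group's rate. The 0.8 threshold is the "four-fifths" rule of thumb from the federal EEOC Uniform Guidelines, used here only as an illustrative assumption; the California rules discussed in this post are not quoted as prescribing this metric or threshold. The function names, group labels, and sample data are all hypothetical.

```python
from collections import Counter

# Assumed screening threshold (EEOC "four-fifths" rule of thumb).
# This is an illustration, not a value taken from the California rules.
FOUR_FIFTHS = 0.8


def selection_rates(outcomes):
    """Map each group to its selection rate.

    outcomes: iterable of (group, was_selected) pairs.
    """
    totals = Counter()
    selected = Counter()
    for group, was_selected in outcomes:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratios(rates):
    """Divide each group's rate by the highest group's rate."""
    best = max(rates.values())
    if best == 0:
        # Nobody was selected, so there is no disparity to measure.
        return {group: 1.0 for group in rates}
    return {group: rate / best for group, rate in rates.items()}


def flag_groups(outcomes, threshold=FOUR_FIFTHS):
    """Return groups whose impact ratio falls below the threshold."""
    ratios = impact_ratios(selection_rates(outcomes))
    return sorted(g for g, ratio in ratios.items() if ratio < threshold)


# Hypothetical screening results: (group, advanced to interview?)
sample = (
    [("group_a", True)] * 50 + [("group_a", False)] * 50
    + [("group_b", True)] * 30 + [("group_b", False)] * 70
    + [("group_c", True)] * 45 + [("group_c", False)] * 55
)

print(flag_groups(sample))  # ['group_b']
```

A flagged group is a prompt for closer review, not a legal conclusion. Rerunning a check like this on fresh data at regular intervals is one way to approach the "throughout their lifecycle" part of the obligation.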

Beyond Compliance: The Broader Implications

While the immediate focus for California employers will be legal compliance and mitigating liability, the deeper implications ripple across the entire AI development and deployment ecosystem. This isn’t just about avoiding lawsuits; it’s about shaping the future of responsible AI.

- For AI Developers: The pressure is now on to build “fairness by design” into their algorithms, with clear audit trails and explainable AI features becoming competitive necessities, not just ethical aspirations.

- For Employers Nationwide: California often serves as a regulatory bellwether. What starts here frequently influences policy in other states and potentially at the federal level. This signals a future where AI governance isn’t abstract but concretely tied to employment law.

- For the Future of Work: These regulations acknowledge that AI isn’t just a tool; it’s an active participant in decision-making that profoundly affects individuals’ careers. They underscore a growing societal expectation that technological advancement must not come at the cost of fundamental human rights and protections.

The path forward demands a proactive approach: regular AI system audits, clear internal policies, and a collaborative dialogue with AI vendors. The era of AI-driven HR is certainly here, but its operation is now subject to a new level of scrutiny, demanding accountability from every link in the chain.