The Weekend the Law Put a Price on Human Judgment

By Saturday morning, the story bending conversations across boardrooms and group chats wasn’t a product launch or a splashy layoff. It was a forecast. At Gartner’s HR Symposium in London, analysts floated a blunt prediction that Business Insider published the day after: by 2032, at least 30% of the world’s largest economies will codify “certified human quotas” into law. Not voluntary guardrails, not policy PDFs for the intranet—statutes that require proof that a minimum share of essential work remains in human hands. The phrase is clinical, but the implication is not. If it holds, the decade ahead won’t simply be about how quickly AI replaces us. It will be about how the state decides to allocate work between people and machines.

From Policy to Mandate

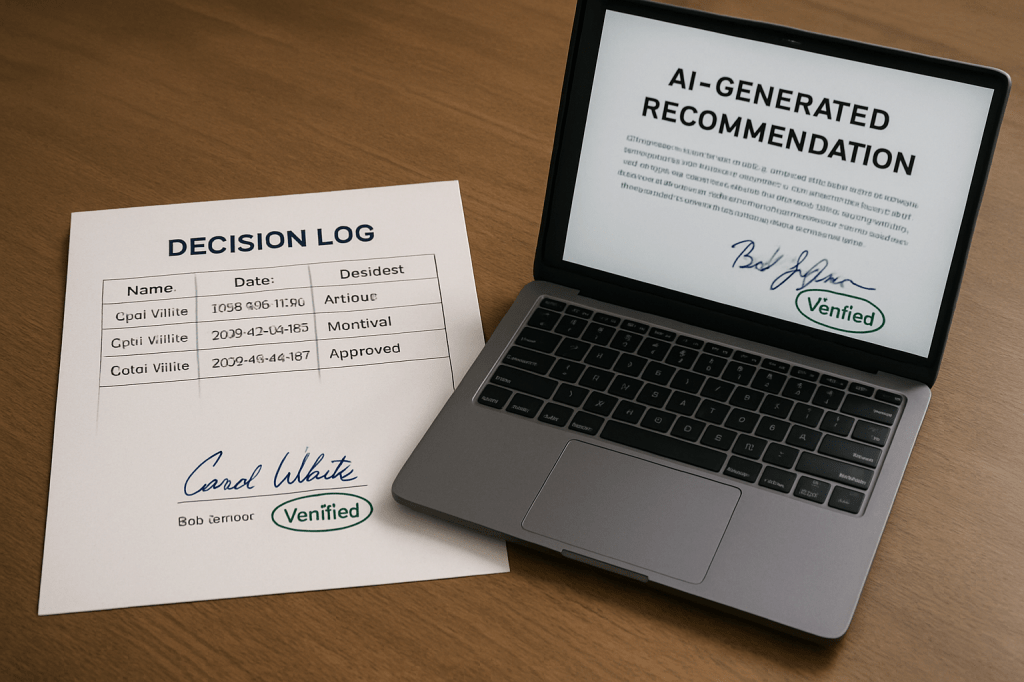

Gartner’s call reframes a familiar idea. “Human in the loop” has lived for years as a best practice and a PR posture. Engineers used it to signal caution. HR used it to soothe. But a quota is different. It moves the locus of power from corporate policy to public enforcement. It says that for critical processes (hiring, performance judgments, safety-sensitive operations, regulated outputs, high-stakes creative claims), companies must not only involve a person but also be able to prove it with names, timestamps, and a lineage of who decided what. That flips the burden of proof. It is no longer enough to say, “We use AI responsibly.” Under a quota regime, the absence of a verified human becomes a compliance failure.

What a Quota Actually Changes Inside a Company

If you run a workflow map today, the natural question is “where can we replace?” Under quotas, the question mutates into “where must we reserve?” The distinction sounds semantic until you start drawing boxes. Every critical path will need human-performed stages designed in. That means job descriptions rewritten to include responsibility for review and sign-off, systems instrumented to capture provenance and chain-of-custody, and a new class of roles that exist not to create from scratch but to bear accountability for what machines propose.

Imagine a credit decision in a bank. The model assembles the file and a recommendation. A named person—licensed, trained, and traceable—reviews, challenges, and either approves or declines. The system records not just the final click but the fact of deliberation: which inputs were consulted, what changes were made, what was overridden. An audit weeks later doesn’t ask the model to explain itself; it asks the bank to prove that a human with authority took the risk. Multiply that logic across safety logs, HR performance ratings, marketing claims, and clinical triage notes. Quotas don’t just slow the march of automation; they make “who did the deciding” part of the product.
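To make the bank example concrete, here is a minimal Python sketch of what such a decision record might hold and how an auditor could query it. Everything in it is hypothetical: `DecisionRecord`, `Override`, `audit_findings`, and the field names are illustrative assumptions, not any regulator's schema or vendor's product. A record that passes these checks shows only that the evidence of deliberation exists, not that the deliberation was meaningful.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical schema: illustrative only, not a regulatory standard.

@dataclass
class Override:
    """One point where the human changed what the model proposed."""
    field_name: str
    model_value: str
    human_value: str
    reason: str

@dataclass
class DecisionRecord:
    """Captures the fact of deliberation, not just the final click."""
    case_id: str
    model_recommendation: str          # e.g. "approve" or "decline"
    reviewer_id: str                   # named, traceable person
    reviewer_licensed: bool            # holds the authority to take the risk
    final_decision: str
    inputs_consulted: list[str] = field(default_factory=list)
    overrides: list[Override] = field(default_factory=list)
    decided_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )

def audit_findings(record: DecisionRecord) -> list[str]:
    """Return the gaps an auditor might flag; an empty list means none found."""
    findings = []
    if not record.reviewer_id:
        findings.append("no named reviewer")
    if not record.reviewer_licensed:
        findings.append("reviewer lacks authority")
    if not record.inputs_consulted:
        findings.append("no evidence of deliberation: no inputs consulted")
    # A human who diverged from the model should have recorded why.
    if record.final_decision != record.model_recommendation and not record.overrides:
        findings.append("final decision differs from model but no override recorded")
    return findings

if __name__ == "__main__":
    reviewed = DecisionRecord(
        case_id="loan-001",
        model_recommendation="approve",
        reviewer_id="j.doe",
        reviewer_licensed=True,
        final_decision="decline",
        inputs_consulted=["credit_report", "income_statement"],
        overrides=[Override("decision", "approve", "decline", "income unverified")],
    )
    rubber_stamp = DecisionRecord(
        case_id="loan-002",
        model_recommendation="approve",
        reviewer_id="j.doe",
        reviewer_licensed=True,
        final_decision="approve",
    )
    print(audit_findings(reviewed))      # []
    print(audit_findings(rubber_stamp))  # ['no evidence of deliberation: no inputs consulted']
```

The second record is the “paper human” case: a licensed, named reviewer clicked approve, but nothing shows they looked at anything. The design choice is to record overrides and consulted inputs as first-class data, so the audit question becomes a query rather than a reconstruction.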

The Measurement Problem

Beneath the headline prediction lies a practical thorn: how do you measure “human enough”? If a reviewer skims an AI draft and accepts it, is that compliance or theater? Regulators are already drifting toward language about meaningful oversight rather than mere presence, but meaningfulness is a design problem, not a slogan. It demands evidence. That is why provenance keeps surfacing in this conversation. Watermarks, citations, and decision logs are not window dressing; they become the substrate for proving that a person engaged with the material in defensible ways.

Expect a temptation toward “paper humans”: placeholders who do the minimum to satisfy a dashboard. If quotas arrive, the line between legitimate oversight and box-ticking will become a contested frontier. Vendors will sell attention metrics, diff tools, and approval workflows as proof of depth. Auditors will probe for the difference between a rubber stamp and a real intervention. The firms that get this right will design for contestability, building mechanisms that let reviewers push back on model outputs, and will make that contest visible.

Labor-Market Math

Quotas act like an employment stabilizer. They don’t reverse automation’s logic, but they put a floor under the demand for judgment. That floor isn’t abstract. It is the number of reviewers, approvers, stewards, and accountable signatories you must carry to run your core processes legally. The first-order effect is obvious: pink slips slow where full automation would have been cheapest. The second-order effects are subtler. Oversight skills gain value. People who can interrogate an AI’s output, cite the right standards, and take responsibility for decisions will command a premium in high-risk domains. At the same time, there is a risk of carving work into narrow approval fragments that deskill the job and compress wages. Whether quotas dignify human agency or relegate humans to “compliance janitors” will depend on how companies design the work around them.

Strategy Shifts for the AI Stack

If this forecast lands, “quota-ready” becomes a procurement criterion. Tools that natively track provenance, support human override, and produce auditable trails will beat shinier but opaque alternatives. RFPs will ask how a system proves that a person with the right authority reviewed an output, not just that a user looked at a screen. Expect a wave of integrations between model providers and governance layers that record lineage, enforce segregation of duties, and surface accountability chains on demand. This is less about fear of rogue AI and more about making regulator conversations short and boring.

Geography will matter, too. Firms may be tempted to move quota-heavy steps to jurisdictions without mandates, but supply chains rarely let you launder accountability. If your buyers, insurers, or sectoral regulators impose human thresholds, the requirement rides with the work. We have seen this movie with privacy and safety regimes: when major markets set the bar, compliance leaks across borders via contracts and audits. The likely equilibrium is messy uniformity—companies standardize on the strictest plausible requirement to avoid running parallel processes.

The Cultural Trade

There is a deeper current running beneath the compliance mechanics. Quotas are a value statement masquerading as regulation. They say that certain decisions should be made by people because they affect people, even if a machine could approximate the same outcome faster. That stance has a cost: it gives up some efficiency and introduces friction in the name of legitimacy. It also carries a danger. If human involvement is reduced to a timestamp, it erodes the very trust it seeks to protect. The cultural work ahead is to ensure that human oversight feels like authorship and accountability, not a prop placed in front of a black box.

Why This Forecast Matters Now

The timing is not accidental. HR leaders are setting next year’s priorities while a generation of tools promises to dissolve whole categories of work. Gartner’s forecast, amplified by Business Insider, gives them a concrete planning constraint two cycles out. The checklist is already taking shape: map the processes that, if automated end-to-end, would invite legal or reputational risk; build provenance into the plumbing; pilot oversight roles that have teeth; and treat headcount reductions as permanent only where you can defend the absence of a human. The companies that start logging, labeling, and allocating accountability now will not have to scramble later to invent proof after the fact.

The Countdown to 2032

Predictions are not policy. Legislatures can stall, regulators can narrow scope, and industry can self-govern convincingly enough to blunt mandates. But this one has momentum because it speaks to a basic democratic instinct: keep humans responsible for decisions that govern other humans’ lives. For readers of this newsletter, there is a twist. If you feared that AI would quietly replace you, the more likely near-term scenario is that the law will loudly reserve some of your work—then require you to prove you did it. The contest of the next seven years won’t be whether humans matter. It will be whether we can show, with evidence, when and how we mattered.