The Day a Headline Turned a Thesis into a Taunt

Sunday didn’t deliver a discovery, a dataset, or a new model. It delivered a sentence. “Not real work” escaped its original habitat and marched straight into the public square, carrying Sam Altman’s name like a banner. A single line—packaged as a judgment about obsolete jobs—was recast as the argument itself, and a weekend without much hard news suddenly had a protagonist and an antagonist. If you work anywhere near the frontier of automation, you could feel the attention reorienting in real time: not to performance metrics or adoption curves, but to the meaning of the word “work” and who gets to define it.

What Altman Actually Said

The remark wasn’t born as a dismissal. Earlier in October, Altman used a farmer’s gaze as a rhetorical device. Imagine a farmer from a few generations back, he suggested, peering at our laptop-bound routines and deciding that what we do isn’t “real work.” In that telling, “real” has always been a moving target. He added that future roles may make today’s office jobs look similarly insubstantial, and he nodded to the need for new social supports through the transition. The claim, whatever you think of it, was about reclassification over time, not contempt for people whose tasks are next in the automation queue.

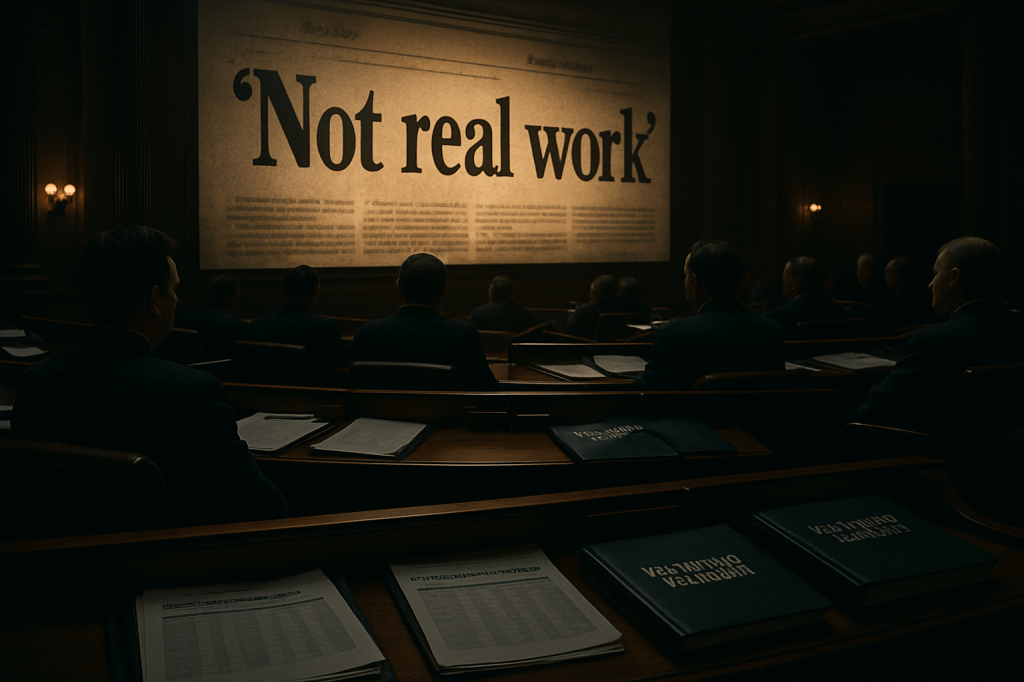

How the Quote Became the Story

Then came the October 26 write-up that promoted the most combustible fragment to the headline and let it stand in for the whole thesis. It was excellent fuel: a memorable, adversarial line that fit inside a phone screen and tipped easily into outrage or defense. The nuance of historical perspective and policy caveats could not compete with a crisp label that sounded like a verdict on present-day workers. By afternoon, the quote had been excerpted, memed, litigated across forums, and lodged firmly in the brainstem of the AI-and-jobs conversation.

Why This Moment Matters

We don’t usually cover media framing as the “big story,” but yesterday’s cycle was itself a form of power. When you compress a thesis about shifting definitions into a charge of disrespect, you do more than irritate a CEO. You shift the policy window. If the loudest reading is “tech thinks your job isn’t real,” then the counter-moves harden: bans, moratoria, procurement hurdles, punitive optics. If, instead, the headline had foregrounded the implied policy questions (who carries the risk during the reallocation of tasks, and how do we fund a new social contract at the speed of diffusion), the debate would have slid toward implementation rather than identity.

The Quiet Claim Inside the Noise

Altman’s underlying proposition isn’t new, but it is sharper in an era when the half-life of job descriptions is shortening. He is asserting that the human appetite for purposeful activity remains intact even as particular bundles of tasks are dissolved by software. That’s not quite the same as saying “it will all work out.” It is a bet that society can relabel, retrain, and re-incentivize quickly enough to keep meaning and livelihoods coupled. The bet becomes risky when the cadence of change outpaces retraining capacity, when credentialing lags new forms of productivity, or when income supports are calibrated for a previous tempo.

Two Questions the Headline Hid

The first is tactical: what cushions should exist while tasks are reallocated? If generative systems consolidate ten roles into three, the delta is not an abstraction. It lands on households, leases, and debt. Wage insurance, on-ramps into adjacent occupations, portable benefits, and employer-funded transition budgets are not high-minded philosophy; they’re the difference between a bridge and a cliff. The second is structural: what mechanisms constitute a new social contract if the transition accelerates? Retraining that actually leads to placement, income supports that clear rent, and credentialing that recognizes competency rather than seat time are not optional if “work” is going to be redefined without tearing the social fabric.

How Language Becomes Infrastructure

Yesterday showed why rhetoric is not cosmetic in this domain. The vocabulary we use to describe disappearing and emerging tasks becomes the scaffolding for rules, budgets, and corporate commitments. Say “not real work,” and you harden identities; say “task reallocation,” and you open spreadsheets. The framing also telegraphs priorities: if leaders emphasize meaning while skirting income volatility, workers hear an offer to swap a paycheck for a pep talk. Conversely, if critics insist any redefinition is inherently demeaning, they foreclose the possibility of better jobs on the other side. Precision isn’t politeness; it’s policy.

Risk, Return, and the New Social Ledger

There’s an unspoken accounting running beneath the debate. The near-term returns of automation accrue to firms and customers; the near-term risks accrue to workers whose skills are suddenly over-supplied. Bridging that mismatch is the whole ballgame. You can do it with taxes, with corporate covenants, with sectoral bargaining, with procurement standards that require transition plans, or with some mix that matches political reality. You cannot do it with vibes. If anything, yesterday’s flare-up is a reminder that we lack a shared grammar for converting productivity gains into transition guarantees at the speed of software.

The Leadership Trap—and the Exit

For AI executives, the trap is predictable. Any argument about historical drift in the meaning of work will be heard by some as minimizing present pain. The exit is not to stop making the argument, but to pair it with specifics that are hard to strip of context. Name targets for funded retraining tied to actual placement. Publish attrition and redeployment rates tied to automation. Commit to timelines for internal upskilling before external layoffs. When the nouns are concrete, the quote economy has less oxygen.

What Yesterday Tells Us About Tomorrow

The most interesting signal wasn’t that a contentious framing went viral on a slow Sunday; it was how quickly it crowded out the policy substance that the original remarks at least gestured toward. That’s a preview. As more organizations move from pilot to platform, the disputes won’t be about model tokens or benchmarks; they will be about definitions, labels, and the moral valence of job redesign. If you are an employer, treat that as a governance problem, not a marketing one. If you are a policymaker, assume the headlines will chase the sharpest phrasing and write statutes that survive it. If you are a worker, the lesson is grim but useful: the case for your next role will be won in credentialing standards and placement pipelines, not in comment threads.

The Sentence That Sold a Sunday

In the end, the quote was real, but it was a synecdoche that ate its source. A thought experiment about how definitions shift became a referendum on respect. That’s on all of us—publishers, platforms, leaders—to fix. Because if the language keeps outrunning the policy, the next “not real work” won’t just be a headline. It will be a budget line, a pink slip, and a missed chance to turn diffusion into dignity.