Congress Just Put a Clock on “AI Made Me Do It”

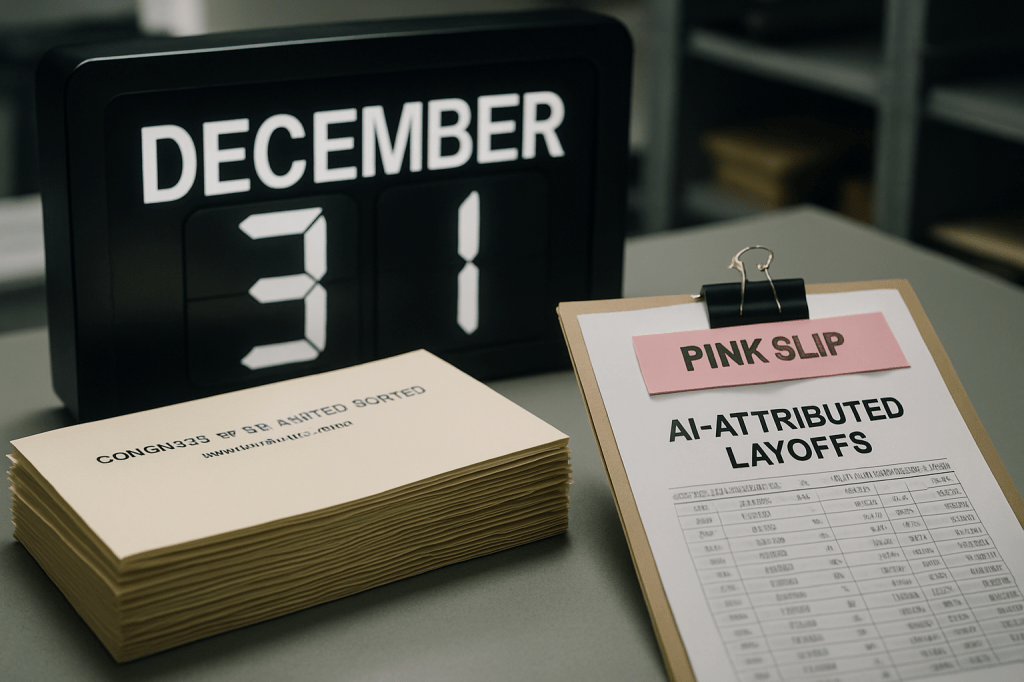

On Thursday, a stack of identical envelopes began landing in the mailrooms of America’s largest employers. Inside each one: a polite but pointed question from Rep. Valerie Foushee, who chairs the New Democrat Coalition’s AI Task Force. How many of your 2025 layoffs were actually caused by AI? Not in headlines, not in vibes, but counted, attributed, and explained. The companies (Amazon, Salesforce, Meta, Verizon, Microsoft, IBM, Google, Accenture, HP, Intel, Target, UPS, Synopsys, Lenovo) were given a year-end deadline. The clock is now running.

For years, the labor story around AI has floated in a haze of anecdotes and euphemisms—“efficiency,” “realignment,” “focus.” Foushee’s letters aim to puncture that fog with numbers. She’s asking for a breakdown of layoffs tied directly to AI adoption or AI-driven restructuring, the demographics of who was affected, and how workers were told their jobs were disappearing. It’s a simple request with a complicated core: prove the role of the algorithm in the pink slip.

From rumor to record

The backdrop is a new figure that won’t fit neatly into a press release. Challenger, Gray & Christmas reports that through November, employers attributed 54,694 announced U.S. job cuts to AI. That’s enough to get Congress’s attention, but not enough to show where the blow actually landed or who bore it. Foushee’s move is to drag the conversation down from the cloud layer to the factory floor, the call center, the back office—company by company, decision by decision.

Measurement changes behavior. If firms must specify how an AI tool didn’t just assist workers but displaced them, they will need definitions, baselines, and a chain of custody for each decision. Once those numbers exist, they won’t stay confined to a letter. They migrate into earnings calls, contractor certifications, union bargaining tables, and, eventually, filings. Investors will ask why one firm’s “AI-attributed” ratio looks nothing like a rival’s. Procurement officers will wonder whether a bidder’s cost advantage came from software that shifted risk onto a specific community. The act of counting becomes an act of governance.

The attribution problem

That governance starts with a thorny question: what does it mean for AI to “cause” a layoff? A chatbot that absorbs Tier 1 support might eliminate roles outright. A code assistant that lifts engineering throughput by 30% could let a manager meet goals with fewer hires: no termination, but a workforce that quietly shrinks. A forecasting model that enables a restructuring might erase jobs without any one model pulling the trigger. None of this is clean, and the temptation to pick the definition that minimizes scrutiny will be strong. Good measurement will require firms to separate direct role elimination from task displacement and from “notional” reductions like avoided hires. It also demands a time axis: did AI enable the change this quarter, or merely accelerate an inevitable plan?
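To make the distinction concrete, here is a minimal sketch of how a firm might keep those three categories apart on a quarterly time axis. Everything in it is hypothetical: the `Attribution` labels, the `HeadcountChange` record, and the sample figures are illustrative assumptions, not a schema that any company or the letters themselves prescribe.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class Attribution(Enum):
    # Hypothetical labels mirroring the distinctions drawn in the text.
    ROLE_ELIMINATION = "role_elimination"    # a role removed outright
    TASK_DISPLACEMENT = "task_displacement"  # tasks automated, role reshaped
    AVOIDED_HIRE = "avoided_hire"            # notional: a hire never made


@dataclass(frozen=True)
class HeadcountChange:
    quarter: str          # time axis, e.g. "2025Q3"
    function: str         # business function, e.g. "support"
    attribution: Attribution
    positions: int        # number of positions affected


def tally(changes):
    """Sum positions per (quarter, attribution) so categories never blend."""
    totals = Counter()
    for change in changes:
        totals[(change.quarter, change.attribution)] += change.positions
    return totals


def direct_eliminations(changes):
    """Count only outright role eliminations, excluding notional reductions."""
    return sum(
        c.positions
        for c in changes
        if c.attribution is Attribution.ROLE_ELIMINATION
    )


# Made-up sample entries, for illustration only.
ledger = [
    HeadcountChange("2025Q3", "support", Attribution.ROLE_ELIMINATION, 40),
    HeadcountChange("2025Q3", "engineering", Attribution.AVOIDED_HIRE, 15),
    HeadcountChange("2025Q4", "support", Attribution.ROLE_ELIMINATION, 10),
    HeadcountChange("2025Q4", "finance", Attribution.TASK_DISPLACEMENT, 25),
]

print(tally(ledger))
print(direct_eliminations(ledger))
```

Keeping avoided hires in their own bucket is the point of the design: a single blended "AI-attributed" number would let a firm pick whichever definition flatters it.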

Expect fights over double counting and causation theater. But also expect a new internal market for evidence. HR, finance, and data teams will need a ledger tying process changes to headcount outcomes, along with model cards, pilot logs, and before-and-after productivity studies. The winners in this compliance sprint won’t just be the lawyers; they’ll be the operators who can instrument AI’s effects with the same rigor they use to track revenue.

Who pays the invisible tax

The letters don’t stop at attribution. They ask who, demographically, is being shown the door. That choice is not incidental. Recent labor softness has fallen hardest on Black workers, and Congress is signaling that aggregate counts are inadequate if the burden clusters in predictable places. Pairing AI attribution with a distributional lens reframes the issue from macroeconomics to civil rights risk. If a company’s AI-enabled restructuring has a disparate impact, the next step isn’t just reskilling grants—it’s oversight from agencies that already police discrimination. The data Foushee is requesting could be the bridge between tech policy and long-standing workplace law.
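As a rough illustration of what a distributional lens could look like in practice, the sketch below compares retention rates across groups and flags any group whose rate falls below 80% of the best-retained group's. That threshold borrows the "four-fifths" rule of thumb from U.S. employment-selection guidance. Applying it to layoffs this way is an assumption made here for illustration, not a legal test and not something the letters specify. The group names and headcounts are invented.

```python
def retention_rates(headcount_before, laid_off):
    """Share of each group still employed after the cuts."""
    return {
        group: (total - laid_off.get(group, 0)) / total
        for group, total in headcount_before.items()
    }


def impact_ratios(rates):
    """Each group's retention rate relative to the best-retained group."""
    best = max(rates.values())
    return {group: rate / best for group, rate in rates.items()}


def flagged_groups(ratios, threshold=0.8):
    """Groups whose ratio falls below the screening threshold."""
    return sorted(g for g, ratio in ratios.items() if ratio < threshold)


# Invented figures, for illustration only.
headcount_before = {"group_a": 1000, "group_b": 400}
laid_off = {"group_a": 100, "group_b": 120}

rates = retention_rates(headcount_before, laid_off)
ratios = impact_ratios(rates)
print(flagged_groups(ratios))
```

A screen like this only says where to look. Whether a flagged gap reflects discrimination, job mix, or something else is the question the requested data would let regulators ask.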

The lever behind the letter

This isn’t an isolated missive. The New Democrat Coalition’s earlier Innovation Agenda centered middle-class workers and reskilling, and two days before the letters went out, House Democratic Leader Hakeem Jeffries named Foushee co-chair of a new commission on AI and the innovation economy for 2026. Translation: the answers won’t vanish into a staffer’s inbox. They are raw material for hearings, model legislation, and—if firms stonewall—subpoenas or rulemaking to standardize disclosure.

Standardization is the endgame. If companies offer precise methodologies, Congress can harmonize them into a reporting framework that looks suspiciously familiar to those who lived through the rise of climate and cyber disclosures: define the scope, require a narrative of controls, and align procurement and grants to favor firms with credible accounting. If responses are vague, that only hardens the case for mandatory rules. Either way, the “AI attribution standard” just began its origin story.

The corporate calculus

Behind closed doors, comms teams will want to claim that AI merely “assisted,” HR will worry about WARN Act implications, and counsel will see disparate-impact exposure if cuts to certain functions fall disproportionately on particular demographic groups. The safest path (minimize, deflect, delay) also invites escalation. The more strategic path is to over-instrument: distinguish task displacement from role elimination, separate automation savings from macro cuts, document alternatives considered, and show how savings funded wage growth, new lines of business, or retraining. It won’t erase the pain, but it converts a liability into a story about intentional transition rather than opportunistic trimming.

The market that appears overnight

Where policy draws a line, startups build to it. Expect a new category to crystallize: AI labor impact accounting. Think software that tags workflow changes to headcount deltas, emits attestations for auditors, and produces demographic fairness analyses alongside productivity metrics. Consultancies will bundle change management with model governance. Vendors whose products delete tasks will face pressure to ship “impact dashboards” the way cloud providers shipped carbon calculators. In procurement-heavy sectors, an “AI impact statement” may become a bid requirement sooner than founders expect.

What’s at stake

There is a narrow window where the narrative around AI and work can shift from fatalism to stewardship. Foushee’s letters do not decide whether jobs move; they decide whether the movement is legible. Legibility invites choices: targeted reskilling credits where the data shows concentrated harm; contract conditions that bar firms from externalizing costs; disclosure norms that let workers and investors price the trade-offs. Without legibility, we’re left with press releases on one side and resentment on the other.

On December 11, Congress didn’t ban anything. It did something more subversive: it demanded receipts. By December 31, we’ll learn whether the country’s most powerful employers can tell a coherent story about how AI touched their payrolls, or whether governing this technology will begin, as it often does, with subpoenas and a blank spreadsheet.